Drawings made with Midjourney version 4.

Sometime this year, Apple is expected to unveil a major new product category in the form of a virtual and augmented reality headset. I’ve been thinking about what Apple may introduce based on existing products in the market, human sight limitations, and Apple’s existing processor options. My guess is that Apple’s philosophy will be to surpass existing mainstream VR headsets when it comes to visual fidelity while not getting terribly close to lifelike visualizations. This assumption is based on my understanding of GPU processing and cost limitations. I also think the major question at this point is still whether Apple wants to create a standalone headset or one that’s used in conjunction with an external computer.

Educated guesses on display specs

Human eyesight has limitations that correspond fairly well with display specs. Humans can only see so much at once (field of view), can only interpret so much light before they just see white (brightness), can only track motion with so much clarity (refresh rate), can only see so much detail (resolution), etc.

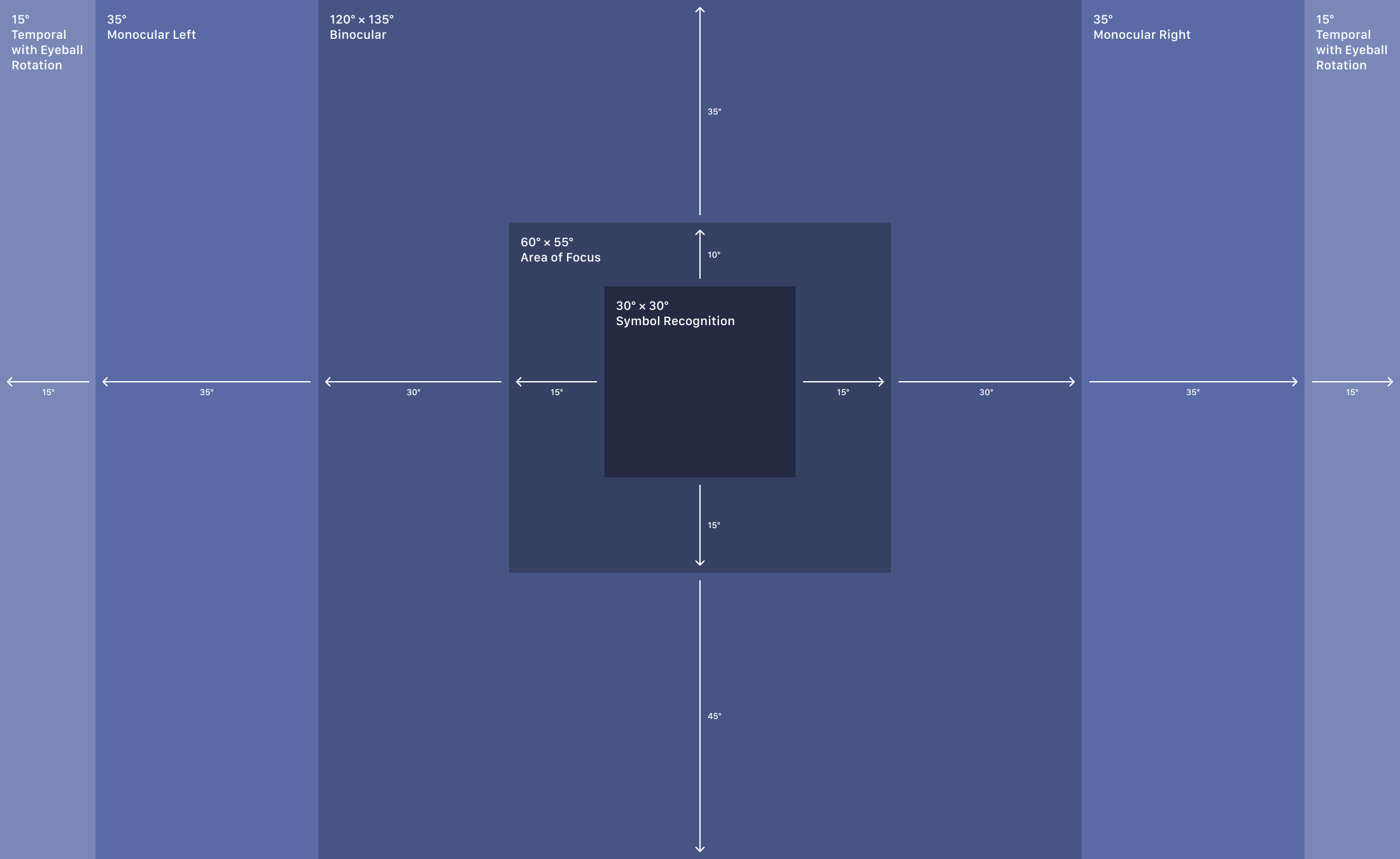

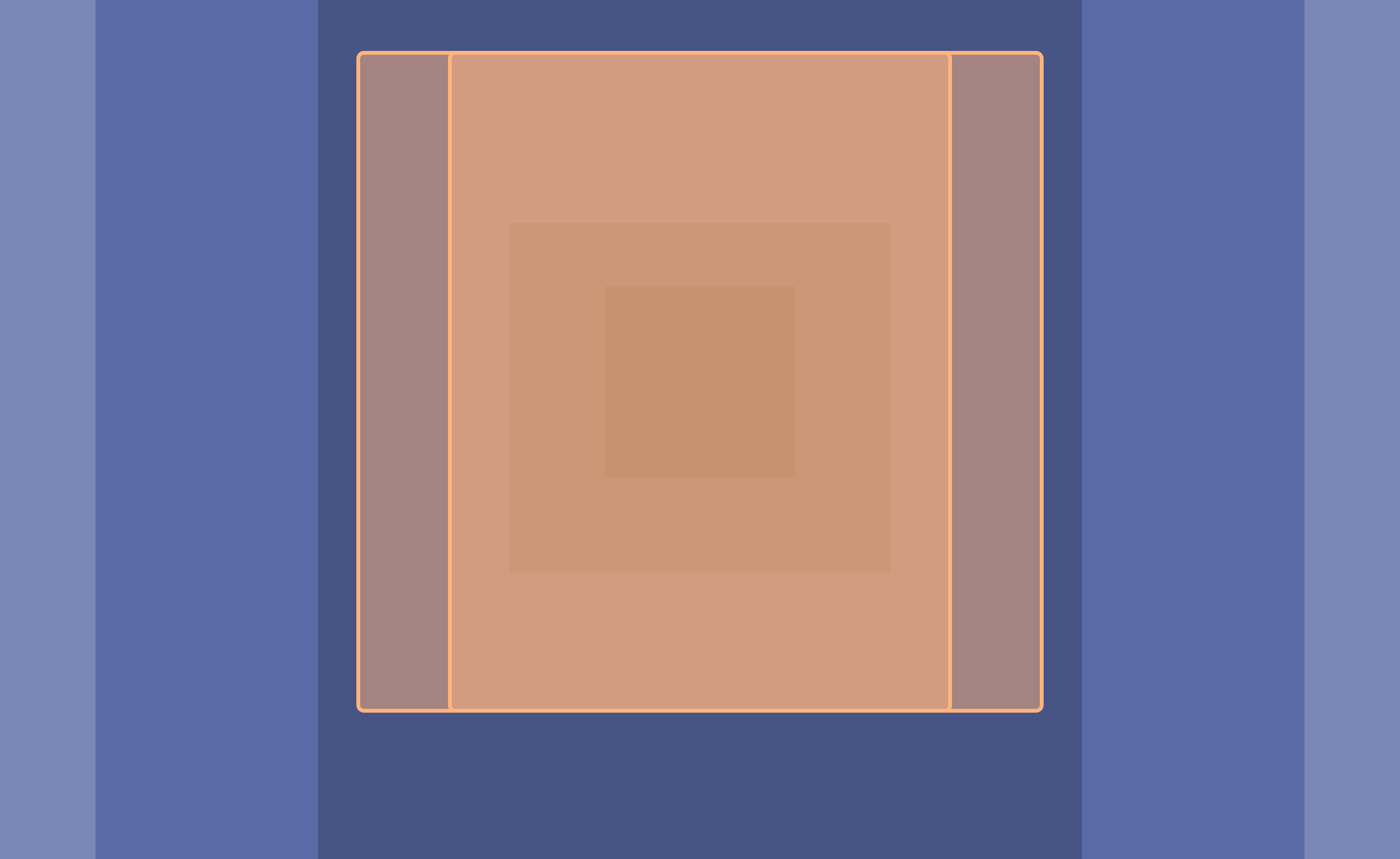

Resolution and field of view are a good place to start. The human eye is capable of seeing a field of view of about 220° horizontally and 135° vertically. I made this diagram to help illustrate how field of view is broken down by region. As you can tell by reading this sentence, it’s difficult to read other text in your field of view while looking at this word, so the center area is the most important while the outside areas provide a sense of place and context.

This diagram is based on several similar sources.

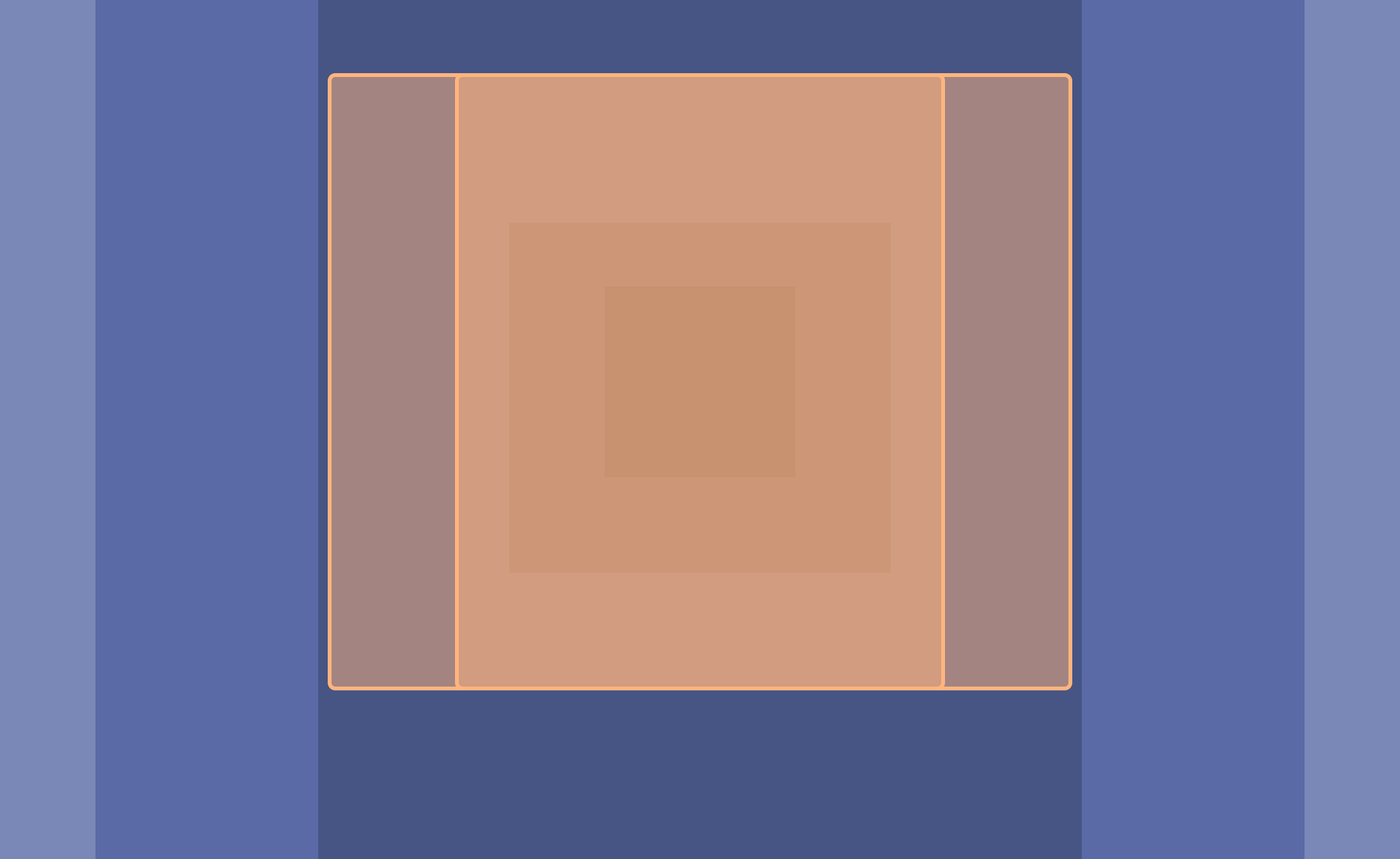

Most headsets have displays within the binocular range, having a field of view of around 100° vertically and horizontally. The two displays in the VR headset have significant content overlap between them so that the user perceives them as one stereoscopic view. Here you can see a few examples. The dark orange region in the center represents content overlap between the two displays.

My guess is that Apple will be interested in breaking out of the binocular range and start to show content in the monocular areas to the left and right. Adding this extra field of view is especially helpful for augmented reality, where the user wants to see their own physical space. StarVR already does this, so it isn’t unprecedented, though it is worth noting that the StarVR headset has a relatively low-resolution display. Apple will have to tread into the monocular zone cautiously, since a wider field of view at the same pixel density means more pixels and therefore higher performance requirements. My guess would be that Apple chooses two 1.18:1 displays spanning 130° horizontally and 110° vertically. This would place them squarely above mainstream headsets in the market today without putting extreme performance requirements on their device.

Apple’s headset could stretch into the monocular regions while staying constrained enough to be able to support a high PPD count.

The next area for Apple to consider is resolution. The human eye is thought to stop perceiving additional detail beyond about 60 pixels per degree (PPD). So in the same way that Steve Jobs presented 326 pixels per inch as retina resolution on a phone display, the Apple headset would need 60 pixels per degree to be considered retina resolution within a headset. This would be quite hard for Apple to achieve, not just from a resolution density perspective but also from a computing performance perspective. Based on the FOV above, 60 PPD would entail having two 7,800 by 6,600 pixel displays (130° × 60 and 110° × 60). And these displays would need to be outputting decent graphics at a fast refresh rate.

Fortunately for Apple, no one is close to 60 PPD in the mainstream VR headset market. The Meta Quest 2 has a PPD of about 19. The HP Reverb G2, widely considered to be very high resolution, has just 24 PPD. My guess would be that Apple’s headset will have 30 PPD, allowing them to rightfully claim industry-leading resolution, while also giving themselves future generations to work towards 60 PPD. There are some resolution tricks that Apple could try, like having the center area of the display have a higher PPD than the rest of the display, but this would probably not result in a seamless enough experience. So the resolution for each eye would be 3,900 by 3,300 pixels. Across both eyes, that’s about 25.7 million pixels, or about 5 million more than the Pro Display XDR.
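The resolution figures above fall out of multiplying field of view by pixels per degree. Here is a rough Python sketch of that arithmetic, assuming pixel density is uniform across the whole field of view (real headset lenses are not that tidy). The function and variable names are my own, and the Pro Display XDR figure is its 6016 by 3384 panel.

```python
def panel_resolution(fov_h_deg, fov_v_deg, ppd):
    """Per-eye pixel dimensions, assuming uniform pixels per degree."""
    return fov_h_deg * ppd, fov_v_deg * ppd

# Guessed per-eye field of view from the text (an assumption, not a known spec).
FOV_H, FOV_V = 130, 110

retina_res = panel_resolution(FOV_H, FOV_V, 60)  # "retina" target
guess_res = panel_resolution(FOV_H, FOV_V, 30)   # guessed first-generation PPD

# Total pixels across both eyes at 30 PPD, compared with one Pro Display XDR.
both_eyes_pixels = 2 * guess_res[0] * guess_res[1]
pro_display_xdr_pixels = 6016 * 3384

print("60 PPD per eye:", retina_res)
print("30 PPD per eye:", guess_res)
print("Both eyes at 30 PPD:", both_eyes_pixels)
print("Extra pixels vs Pro Display XDR:", both_eyes_pixels - pro_display_xdr_pixels)
```

Halving the PPD quarters the pixel count, which is why 30 PPD is so much more approachable than 60.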

PPD Comparison

In terms of peak brightness, humans stop perceiving additional brightness after about 10,000 nits. Going as high as 1,000 nits could be harmful to your eyes after prolonged exposure. For context, the Meta Quest 2 only supports 100 nits. The iPhone 14 Pro’s screen goes to 2,000 nits to combat the brightness of the sun outdoors. The Pro Display XDR goes to 1,600 nits to show HDR content. Meta’s own “Starburst” prototype went all the way to 20,000 nits, but it’s hard to imagine that being necessary or practical for quite some time. My guess would be that peak brightness for Apple’s headset would probably sit somewhere around 500 nits, with features dedicated to dimming the display in order to avoid prolonged exposure to damaging light. I also wouldn’t be surprised if very bright light, up to around 1,000 nits, might be reserved for an AR mode, where the user could be viewing bright lights in their surroundings. I hope that Apple uses either OLED or microLED for true blacks and lower latency.

One other important spec that will impact overall device performance is refresh rate. Mainstream refresh rates for headsets are between 90 Hz and 144 Hz. With refresh rate, I think Apple will aim for ProMotion-level performance, which is 120 Hz. This would place them squarely above the HP Reverb and on par with the HTC Vive Pro 2 and Meta Quest 2. Only the Valve Index and Pimax headsets have refresh rates of more than 120 Hz. Similar to resolution, a 120 Hz refresh rate would allow Apple to market the headset as having ProMotion, and set themselves up for another brand name when they move to higher refresh rates in future headset generations. It’s worth noting that the human eye isn’t thought to be capable of perceiving refresh rates higher than 240 Hz, so this is another situation where Apple will eventually need to double a spec of the display, which will result in much higher GPU performance requirements.

Refresh Rate Comparison

There are other aspects of the headset like being able to focus on specific objects, or having more rendering performance pushed to 3D objects being looked at, but those have less to do with the limitations of human vision and more to do with the basic technology that will need to be built into the headset and its operating system. So for now, I’d like to focus on the performance required to drive these displays. What chip might Apple use for their headset?

Apple silicon to the rescue

To take a step back, it’s worth explaining how I’m thinking about performance. The two displays combined would essentially have a resolution of 7,800 by 3,300 pixels, refreshed 120 times every second. This could also be framed as being 3,088,800,000 pixels per second, or 3,089 megapixels per second (MPPS). So this gives us what the display is capable of presenting, but it doesn’t tell us how that MPPS number will impact graphical rendering performance. In other words, if the resolution and refresh rate were lower, as they are with Apple’s competition, presumably the GPU would be able to use those freed-up resources to render higher quality graphics.
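As a quick sanity check on the MPPS figure, here is a minimal Python sketch. The function name is my own, and the 90 Hz line is a hypothetical comparison to show how much pixel throughput a lower refresh rate would free up.

```python
def megapixels_per_second(width_px, height_px, refresh_hz):
    """Pixels the display must be fed each second, in millions."""
    return width_px * height_px * refresh_hz / 1_000_000

# Two 3,900 by 3,300 panels side by side, as guessed in the text.
combined_width = 2 * 3900
apple_mpps = megapixels_per_second(combined_width, 3300, 120)

# Hypothetical: the same panels driven at 90 Hz instead of 120 Hz.
apple_mpps_90hz = megapixels_per_second(combined_width, 3300, 90)

print(f"120 Hz: {apple_mpps:,.1f} MPPS")
print(f" 90 Hz: {apple_mpps_90hz:,.1f} MPPS")
```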

MPPS Comparison

In order to judge how Apple’s chips would fare at rendering content to the 3,089 MPPS display, we need a point of comparison. The recently released HTC Vive XR Elite is a good baseline. We can use its MPPS and 3DMark Wild Life Extreme benchmark score to compare the Vive XR Elite’s Adreno 650 GPU with Apple’s A and M series GPUs. The Vive XR Elite has two 1920 by 1920 pixel displays with a 90 Hz refresh rate, meaning that its display renders at 664 MPPS. The Adreno 650 has a 3DMark score of about 1,200. If you divide its 3DMark score by its MPPS, you get 1.84. This number can be considered a performance baseline for Apple GPUs needing to render at 3,089 MPPS. In other words, if an Apple GPU has a 3DMark score of about 5,700, it can be expected to perform about as well as the Adreno 650 when it comes to rendering 3D content. This is very back-of-the-napkin math, but I think it gives at least a relative comparison between what users could expect from modern VR headsets versus what Apple could make with their current chips when accounting for much higher resolution and refresh rate.
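The baseline math can be sketched in Python as follows. This takes the 1.84 score-per-MPPS ratio from the text as given (the rounded 1,200 score on its own would give a ratio nearer 1.81), and the function names are mine. The A16 score of about 3,200 is the figure used in this post.

```python
def megapixels_per_second(width_px, height_px, refresh_hz, eyes=2):
    """Display throughput in millions of pixels per second across all eyes."""
    return width_px * height_px * eyes * refresh_hz / 1_000_000

xr_elite_mpps = megapixels_per_second(1920, 1920, 90)  # roughly 664
apple_mpps = megapixels_per_second(3900, 3300, 120)    # roughly 3,089

# Benchmark score per MPPS for the Vive XR Elite, as quoted in the text.
BASELINE_SCORE_PER_MPPS = 1.84

def required_score(target_mpps, score_per_mpps=BASELINE_SCORE_PER_MPPS):
    """Benchmark score needed to match the baseline at a given MPPS."""
    return target_mpps * score_per_mpps

apple_required = required_score(apple_mpps)

# How an A16-class score (about 3,200) compares with that requirement.
a16_relative = 3200 / apple_required

print(f"XR Elite: {xr_elite_mpps:,.0f} MPPS")
print(f"Score needed at {apple_mpps:,.0f} MPPS: {apple_required:,.0f}")
print(f"A16 relative to baseline: {a16_relative:.0%}")
```

This is a linear scaling assumption: it treats rendering cost as proportional to pixels per second, which is a simplification rather than a real performance model.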

The iPhone 14 Pro’s A16 has a 3DMark score of about 3,200, meaning it could be expected to underperform the Vive XR Elite when having to render at the much higher MPPS. The iPad Pro’s M2 has a score just below 5,700, so it could be expected to roughly match the performance of the Vive XR Elite.

But this approach is definitely grading the Vive XR Elite on a curve, because of its lower MPPS. For an apples-to-apples comparison, it would be better to see what the graphics performance would be if the Vive XR Elite’s Adreno 650 GPU needed to support the Apple headset’s MPPS. Indeed, we can actually compare across several different GPUs on the market to get a sense of how their MPPS might impact performance, and where the best GPU pick would be on this spectrum.

Graphics Comparison

This chart raises a very important question. How much power does Apple want their headset to have? Integrating an M2 processor - the equivalent of the iPad Pro’s guts - into a VR headset would be straightforward and unlock breakthrough performance relative to other headsets in the industry, enabling a generational step in FOV and resolution. But when compared with the rendering power of an Nvidia 4090, an Apple headset powered by an M2 would still be squarely in the mobile performance category when it comes to 3D visual quality.

If Apple wants to enable more true-to-life experiences, it would be quite difficult for them to rely on a very low power and fan-free design. When attempting to incorporate an M2 Pro or M2 Max chip, they might also get into a “runaway weight” problem where the device’s battery and thermal management system render the headset too cumbersome to have on your head for more than a few minutes.

If Apple wants to enable higher fidelity graphics, it’s inevitable that the headset would need to connect to an external computer, where size, weight, and power would be relatively unconstrained. Based on price, this would likely result in the M2 Max being the ideal pick.

If Apple wants their headset to be a standalone device, then either the A16 or the M2 are the likely candidates. But there is another approach that could give users - and engineers at Apple - the best of both worlds.

Please take the computer off my head

If Apple were to utilize the M2 Max for their headset, and they modularized the computer from the core headset technology, one has to wonder where the computer would go. Of course, Apple could follow others in the market and simply have a long cable that runs to a Mac on a desk. This is what the recently released PSVR 2 does as well. But tripping over a wire probably isn’t Apple’s desired approach. There is reporting that at one point Apple was exploring having a computer in the user’s vicinity while beaming data wirelessly to the headset. That idea was apparently scrapped. Honestly it’s hard to understand how that would have worked given the requirements for low latency and high refresh rates in a headset.

Instead, I think having the computer on the user’s back in a backpack form-factor would have a clear mobility advantage while leaving the computer unconstrained relative to other standalone headsets. If the backpack is 2 lbs, and the computer is 4 lbs (and that’s a few ounces heavier than Apple’s heaviest laptop), the entire package would be just 6 lbs. In all likelihood, the computer-backpack could be lighter given that having a display, keyboard, and speakers would not be necessary.

Beyond processing power, a computer-backpack could also have additional cameras and sensors to help track a user’s body movement and surroundings. This would allow the headset to only integrate the bare minimum number of cameras and sensors.

In a world where a user can connect their headset to a computer-backpack, the headset itself could also be a limited standalone headset. It could have a small processor and battery intended for supporting basic tasks for a short period of time. For example, the headset could have an A16 chip. In a standalone mode, the headset could lower its max frame rate to 90 Hz and limit the field of view to 100° by 100°. This would lower the headset’s MPPS to just 1,620, allowing its performance score to sit at about 2, which would be just above the M2 without those limitations. The A16 would be more than capable of powering the headset for quick tasks where the user doesn’t want to bother with the backpack. Because this mode would just be for quick actions, the battery on the device could be small, only intended to power the headset for up to 1 hour. Having the A16 offload some tasks from the main external GPU would also help to push graphical performance a bit further.
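To show where the 1,620 MPPS and the score of about 2 come from, here is a small Python sketch comparing the two modes. The `DisplayMode` class and its field names are hypothetical, and it assumes both modes keep the guessed 30 PPD with uniform pixel density.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DisplayMode:
    fov_h_deg: int   # per-eye horizontal field of view
    fov_v_deg: int   # per-eye vertical field of view
    ppd: int         # pixels per degree
    refresh_hz: int

    def mpps(self, eyes=2):
        """Megapixels per second across all eyes."""
        pixels_per_eye = (self.fov_h_deg * self.ppd) * (self.fov_v_deg * self.ppd)
        return pixels_per_eye * eyes * self.refresh_hz / 1_000_000

tethered = DisplayMode(fov_h_deg=130, fov_v_deg=110, ppd=30, refresh_hz=120)
standalone = DisplayMode(fov_h_deg=100, fov_v_deg=100, ppd=30, refresh_hz=90)

A16_SCORE = 3200  # approximate benchmark score used in this post

# Score per MPPS: higher means more rendering headroom per displayed pixel.
standalone_ratio = A16_SCORE / standalone.mpps()
tethered_ratio = A16_SCORE / tethered.mpps()

print(f"Standalone: {standalone.mpps():,.0f} MPPS, A16 ratio {standalone_ratio:.2f}")
print(f"Tethered:   {tethered.mpps():,.0f} MPPS, A16 ratio {tethered_ratio:.2f}")
```

Trimming the field of view and refresh rate roughly halves the pixel throughput, which is what lifts the A16 from about 1.04 to about 1.98 on this measure.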

So this would leave Apple with a couple of products that would be a part of a virtual reality system.

Headset: Apple Reality Pro

Computer-backpack: Reality Pack Pro

Computer-backpack for Macs: Reality Pack for Mac

These products could be purchased independently or as a bundle, à la the Pro Display XDR and its very pricey stand. The Reality Pack Pro would have a few configurations that focus on improving overall performance. The Reality Pack for Mac would have specific variations for the MacBook Pro, Mac mini, and Mac Studio.

Designing for the long term

This arrangement of having a light-as-possible headset on the user’s head and a quite-light computer on the user’s back could have longevity for quite some time. Thinking back to the beginning of this post, remember that a display that starts to match the abilities of the human eye would be extraordinarily demanding. It would have a field of view of at least 220° by 135°, a resolution of at least 10,200 by 8,100 pixels for each eye, running at 240 Hz. That’s about 39,700 MPPS. And that’s before we get to improved focusing, brightness, color, hand tracking, battery life, and all the rest. By my math, this kind of human-eye level headset would need a processor with a 3DMark score of about 73,000 (39,700 MPPS times the 1.84 baseline) just to have the graphical rendering capabilities of the Adreno 650. For reference, the M1 Ultra (currently Apple’s best chip) has a score of just 35,000.
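For anyone who wants to check the human-eye numbers, here is a rough Python sketch. It reuses the 1.84 score-per-MPPS baseline from earlier in the post and the approximate M1 Ultra score quoted above; the variable names are my own, and the linear scaling is an assumption rather than a real performance model.

```python
SCORE_PER_MPPS = 1.84    # Vive XR Elite baseline ratio from earlier in the post
M1_ULTRA_SCORE = 35_000  # approximate score quoted in the text

# Human-eye level display: 10,200 by 8,100 pixels per eye at 240 Hz.
pixels_per_eye = 10_200 * 8_100
human_eye_mpps = pixels_per_eye * 2 * 240 / 1_000_000

# Score needed to match the baseline at that throughput.
required_score = human_eye_mpps * SCORE_PER_MPPS

# How many times the M1 Ultra's score that requirement represents.
shortfall = required_score / M1_ULTRA_SCORE

print(f"{human_eye_mpps:,.0f} MPPS")
print(f"Required score: about {required_score:,.0f}")
print(f"That is {shortfall:.1f}x the M1 Ultra")
```

With these inputs the requirement lands near 73,000, or a little over twice the M1 Ultra.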

My hope is that Apple decides to let users take advantage of their already-purchased Macs for their headset as well. If you’ve already spent $5,000 on a MacBook Pro, it would be great if Apple could just sell you a $500 backpack that includes a specific case for the computer along with proper wiring for the headset and integrated cameras and sensors for tracking. The same even goes for the Mac Studio, where having a large battery bank could allow a user to render using the M1 Ultra, though Apple would be getting close to 10 lbs, where users would start to feel the weight.

With this componentised approach, Apple would also be able to continue developing the headset itself to be more capable with each generation. Eventually, the need for a separate computer-backpack would fall off, and the headset would start to take on more of the computing, battery, and sensor responsibilities. But, in my opinion, lightness and comfort should always be the leading attributes. It remains to be seen how Apple will put together their headset, and what features and benefits they will prioritize. But I for one am on the edge of my seat waiting to see what they come up with for the first-generation product and beyond.